Here's How Instagram's New Self-Harm and Suicide Reporting System Works

Article updated July 22, 2019.

Last week, Instagram released a new feature that lets users flag posts whose images or messages reference suicide or self-harm. In the past, flagging these images as inappropriate would result in them being deleted. Now, users whose posts have been flagged will receive a message from Instagram with support resources. The app’s latest update will also offer you a list of mental health resources if you search the app for keywords (suicide, depression, self-harm, etc.) that indicate you might need support.

When the update was announced, The Mighty found a bug, which may explain why this feature did not work for you when it was first released. Now that the bug has been fixed, we’ve created a walkthrough to show how the update works.

Reporting a Photo That Contains Suicide or Self-Harm Imagery

If you stumble across a post that leaves you concerned about a user’s mental health, you can flag the post as “self injury,” and let Instagram know you think that user needs support.

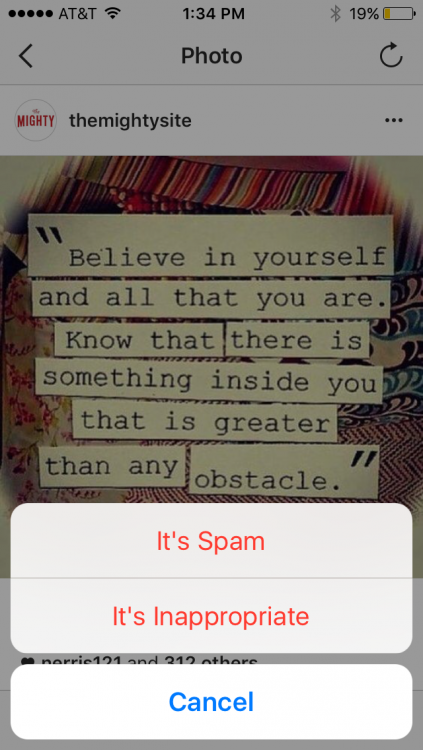

To report the photo, tap the three dots to the right of the person’s username. You will then see an option to report the photo. After hitting report, you have two options: “It’s spam” and “It’s inappropriate.” Choose “It’s inappropriate.”

When asked why posts featuring references to suicide and self-harm were categorized under “inappropriate,” a spokesperson for Instagram told The Mighty that inappropriate is the term Instagram uses for reporting any items that are not spam.

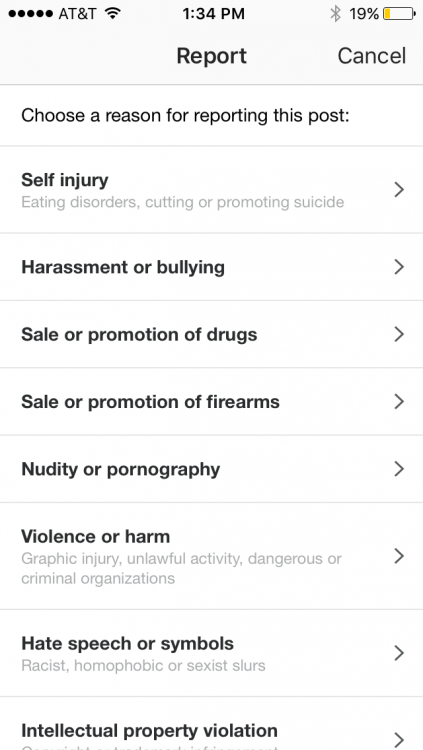

After selecting “It’s inappropriate,” you will be redirected to a menu that lists a number of reasons why a photo might be flagged. Pick the first one, “self injury.”

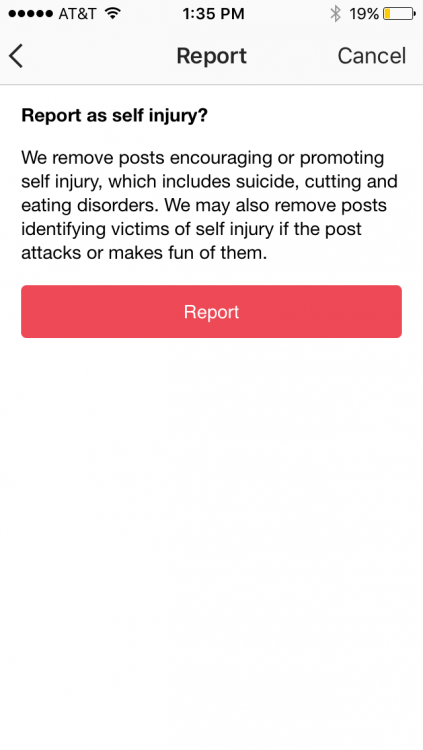

Instagram will then ask you if you want to report the post as “self injury,” explaining that the app removes posts that encourage or promote self-injury including references to suicide, cutting and eating disorders. The app also removes photos identifying people who self-injure, especially if the post attacks or makes fun of the person.

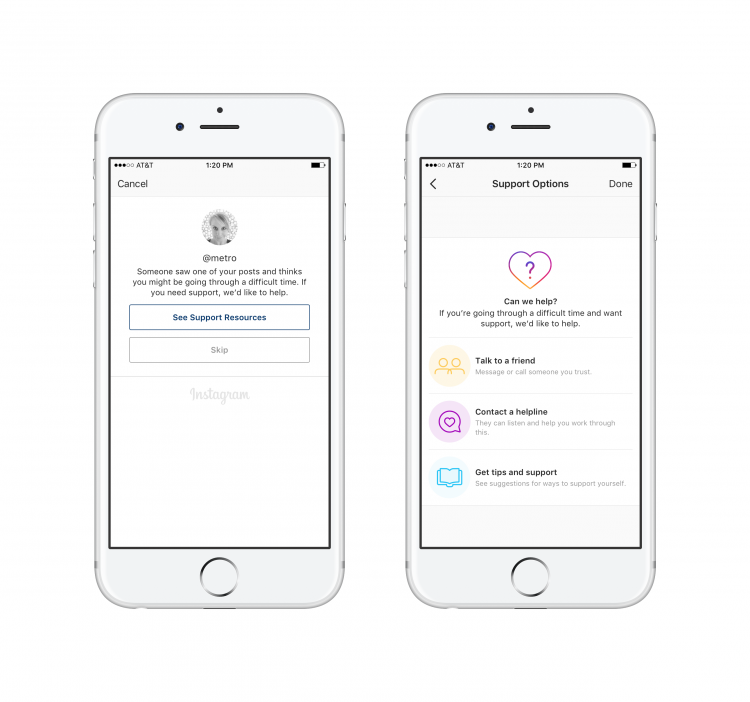

Once a post is reported, it is sent to a team that monitors flagged posts. Posts are monitored all day, every day, the company said in a press release. If Instagram’s team agrees with your assessment, the user whose post you’ve reported will get the following message: “Someone saw your posts and thinks you might be going through a difficult time. If you need support, we’d like to help.” From there, the reported user can view support options such as talking to a friend, calling a helpline and accessing a list of tips and support. That list was compiled by Instagram and the National Eating Disorders Association; Dr. Nancy Zucker, an associate professor of psychology and neuroscience at Duke University; Forefront, a University of Washington initiative studying suicide prevention innovations; National Suicide Prevention Lifeline; Save.org; Samaritans; Beyond Blue; Headspace; and people with real-life experience managing mental illness.

Searching Tags With Flagged Keywords

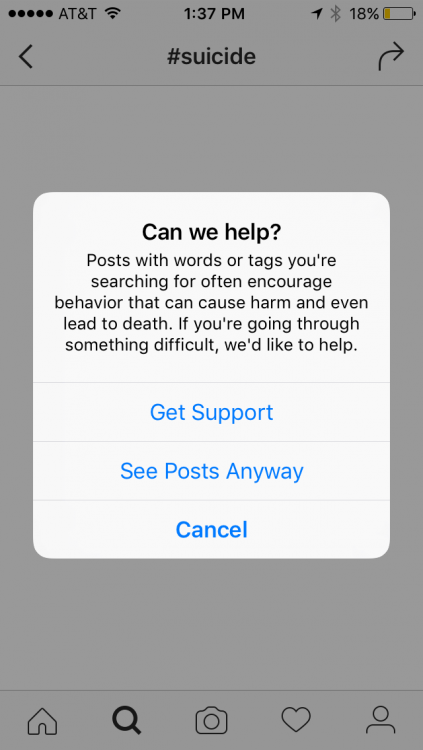

If you search for tags such as suicide, depression, self-harm or cutting, Instagram will immediately show you a dialog box offering the same resources that are sent to users whose photos have been reported.

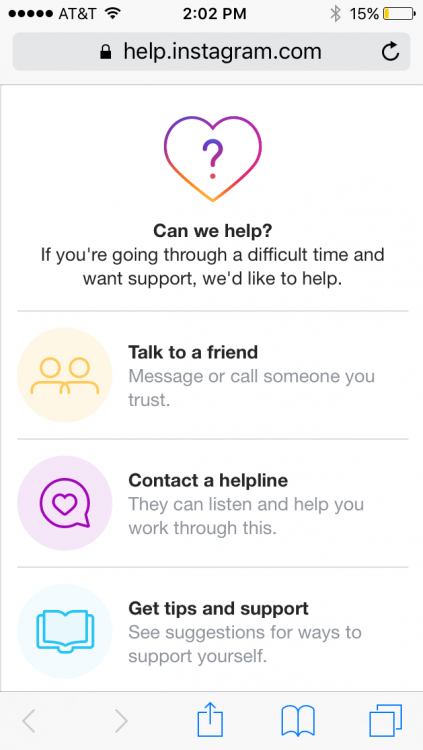

If you choose “Get Support,” you will be taken out of the Instagram app and redirected to Instagram’s website where you will see a list of support resources.

The resources and hotline information you see depend on your location. Instagram has partnerships with over 40 organizations around the world, which helps connect those who need help with the most appropriate resources.

If you or someone you know needs help, visit our suicide prevention resources page.

If you need support right now, call the Suicide Prevention Lifeline at 1-800-273-8255. You can reach the Crisis Text Line by texting “START” to 741-741.